Building NetCDF for HPC

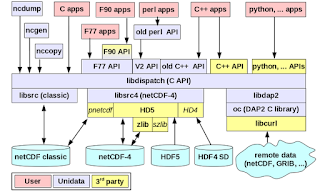

Building netCDF from scratch, on a High Performance Computing (HPC) platform is a challenge. There are a lot of other libraries involved. This diagram shows all the possible 3-rd party libraries in a netCDF build (the yellow boxes):

Why Build from Source?

Building the netCDF library from source is not usually necessary for most users.

On most HPC systems, netCDF will already be installed somewhere. Contact your sysadmins and ask how to use it.

Sometimes you need to build from source, because:

- You need the latest version, and the sysadmins haven't installed it.

- You need some combination of tools and versions that is not already installed.

- You are the sysadmin, and you have to build the libraries so that all your users don't have to.

- You want to have full control and understanding of the build process.

Building with Autotools

The libraries we need to build all have standard "autotools" build systems. This means that the developers use autoconf/automake/libtool to give users a tarball that can be built on a wide variety of platforms. Any platform with a C99 compiler can build these libraries. The steps to unpack, configure, build, and install are the same for each library.

Unpacking

Each library comes packaged in a "tarball", which is a standard Unix tarfile compressed with gzip or bzip2.

Each library has a website that hosts the tarballs for all releases. Download the tarball for the release you want, and use the tar command to unpack it. For example, download mpich-3.2.tar.gz and unpack it like this:

tar zxf mpich-3.2.tar.gz
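When you are unpacking several tarballs, a small shell function can choose the right tar flags from the file extension. This is a minimal sketch. The function name unpack is hypothetical, and the function assumes a tar that understands z (gzip) and j (bzip2). Many modern tar versions can also detect the compression themselves with plain "tar xf".

```shell
# unpack ARCHIVE: extract a gzip- or bzip2-compressed tarball into the
# current directory. "unpack" is a hypothetical helper, not a standard tool.
unpack() {
    case "$1" in
        *.tar.gz|*.tgz)   tar zxf "$1" ;;  # gzip-compressed tarball
        *.tar.bz2|*.tbz2) tar jxf "$1" ;;  # bzip2-compressed tarball
        *)
            echo "unpack: unrecognized archive type: $1" >&2
            return 1
            ;;
    esac
}

# Example: unpack mpich-3.2.tar.gz
```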

Configuring

Now change to the directory created by the tarball:

cd mpich-3.2

In that directory, run the script called "configure". (But first set any environment variables, such as CC, that are needed to make the compile work.) For example:

./configure --prefix=/home/ed/local

The Prefix Directory

The directory given in the prefix argument is important: it is where the library will be installed when you later type "make install" (see below). The default is "/usr/local". In the above example, I have specified "/home/ed/local" instead. If the directory doesn't exist, it will be created. Under this directory the package creates subdirectories such as "lib" and "include". The compiled library ends up in the lib directory, and the header files in the include directory.
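Because every library goes through the same configure / make / make install sequence, that sequence can be wrapped in one shell function. This is a minimal sketch. The function name build_autotools and the SUDO variable are hypothetical. Environment variables you have exported, such as CC, CPPFLAGS and LDFLAGS, are passed through to configure.

```shell
# build_autotools SRCDIR PREFIX [EXTRA_CONFIGURE_ARGS...]
# Hypothetical helper: configure with --prefix, build and run the tests,
# then install into PREFIX. Set SUDO=sudo if PREFIX (e.g. /usr/local) is not
# writable by you; leave it unset to install as yourself.
build_autotools() {
    srcdir=$1
    prefix=$2
    shift 2
    (
        cd "$srcdir" || exit 1
        ./configure --prefix="$prefix" "$@" || exit 1
        make all check || exit 1
        ${SUDO:-} make install   # an empty SUDO expands to nothing
    )
}

# Example: CC=mpicc exported, then
#   build_autotools szip-2.1 /home/ed/local
```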

Frequently you will want to override the default prefix and provide your own. Reasons include:

- Root (su) privileges are needed to install to /usr/local, and you don't have them.

- You are keeping different versions of the same library.

- You're installing parallel and non-parallel versions of the library.

- You're installing different builds of the library with different configuration options.

In the sample commands below, I install each library into its own directory, and the directory name contains the library version plus the MPI library name and version. That gives me directory names like "/usr/local/hdf5-1.10.1_mpich-3.2".

It is simpler to install everything under one directory, if you are not maintaining multiple builds of the same library. In that case, I would use "/home/ed/local", and install everything there.
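If you do keep separate builds, generating the directory names from a single function keeps them consistent across libraries. This is a minimal sketch of the naming scheme described above. The function name install_prefix is hypothetical, and the root directory defaults to /usr/local.

```shell
# install_prefix NAME VERSION MPI_NAME MPI_VERSION [ROOT]
# Hypothetical helper that prints ROOT/NAME-VERSION_MPI_NAME-MPI_VERSION,
# e.g. /usr/local/hdf5-1.10.1_mpich-3.2. ROOT defaults to /usr/local.
install_prefix() {
    printf '%s\n' "${5:-/usr/local}/${1}-${2}_${3}-${4}"
}

# Example:
#   CC=mpicc ./configure --prefix="$(install_prefix szip 2.1 mpich 3.2)"
```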


Order of Library Install

The libraries must be built and installed in the correct order:

- MPICH

- zlib

- szip

- HDF5

- netCDF
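Each library depends on the ones before it, so it can help to confirm that a dependency really landed in its prefix before you configure the next one. This is a minimal sketch. The function name check_installed is hypothetical, and it only looks for a static (.a) or shared (.so or .dylib) library file in PREFIX/lib. The header/library pairs in the example comments (zlib.h with libz, szlib.h with libsz, hdf5.h with libhdf5) are the usual ones for these packages.

```shell
# check_installed PREFIX HEADER LIBNAME
# Hypothetical helper: succeeds if PREFIX/include/HEADER exists and
# PREFIX/lib contains libLIBNAME.a, libLIBNAME.so or libLIBNAME.dylib.
check_installed() {
    if [ ! -f "$1/include/$2" ]; then
        echo "missing header: $1/include/$2" >&2
        return 1
    fi
    for f in "$1/lib/lib$3.a" "$1/lib/lib$3.so" "$1/lib/lib$3.dylib"; do
        [ -e "$f" ] && return 0
    done
    echo "missing library: $1/lib/lib$3.*" >&2
    return 1
}

# Example, before configuring HDF5:
#   check_installed /usr/local/zlib-1.2.11_mpich-3.2 zlib.h z
#   check_installed /usr/local/szip-2.1_mpich-3.2 szlib.h sz
```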

Debugging Problems

The configure script produces a file called config.log. This is a valuable tool in debugging build problems.

Configure works by performing a bunch of tests on your machine to see what it can and can't do. You can see some output from these tests as configure runs. For example, one of them checks whether the zlib library can be found. There is at least one test for each of the libraries listed above.

The config.log file is very large, but the most important part is the logged output from all these tests.

If your compile or configure ends with a complaint about a library (for example, that it can't find HDF5), go to config.log and do a text search for HDF5. You will find the section of the file where the HDF5 tests were run, and you can see their results.

This will usually tell you where the problem lies. Frequently it is a mistake in CPPFLAGS or LDFLAGS, and so configure cannot find either the header file or the library to link to.
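The text search can be done with grep, showing a little context around each match so that you can see the failing compile or link command. This is a minimal sketch. The function name show_config_tests is hypothetical, and it assumes a grep that supports -C for context lines (GNU and BSD grep both do).

```shell
# show_config_tests LIBRARY [LOGFILE]
# Hypothetical helper: print every line of config.log (or LOGFILE) that
# mentions LIBRARY, matched case-insensitively as a fixed string, with line
# numbers and two lines of context on each side. Returns non-zero if
# nothing matches.
show_config_tests() {
    grep -n -i -F -C 2 -- "$1" "${2:-config.log}"
}

# Example: show_config_tests hdf5 | less
```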

Build Commands

Here are the build commands I used:

MPICH

cd mpich-3.2 && ./configure --prefix=/usr/local && make all check && sudo make install

zlib

CC=mpicc ./configure --prefix=/usr/local/zlib-1.2.11_mpich-3.2

make all check

sudo make install

szip

CC=mpicc ./configure --prefix=/usr/local/szip-2.1_mpich-3.2

make all check

sudo make install

HDF5

CC=mpicc ./configure --with-zlib=/usr/local/zlib-1.2.11_mpich-3.2 --with-szlib=/usr/local/szip-2.1_mpich-3.2 --prefix=/usr/local/hdf5-1.10.1_mpich-3.2 --enable-parallel

make all check

sudo PATH=$PATH:/usr/local/bin make install

NetCDF

CPPFLAGS='-I/usr/local/zlib-1.2.11_mpich-3.2/include -I/usr/local/szip-2.1_mpich-3.2/include -I/usr/local/hdf5-1.10.1_mpich-3.2/include' LDFLAGS='-L/usr/local/zlib-1.2.11_mpich-3.2/lib -L/usr/local/szip-2.1_mpich-3.2/lib -L/usr/local/hdf5-1.10.1_mpich-3.2/lib' CC=mpicc ./configure --enable-parallel-tests --prefix=/usr/local/netcdf-c-4.5.0-rc1_mpich-3.2

make all check

sudo make install
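The netCDF configure line passes an -I option (the include directory) and an -L option (the lib directory) for every dependency's install prefix. Building both variables from the list of prefixes makes it harder to leave off an /include or /lib suffix. This is a minimal sketch. The function name dep_flags is hypothetical, and it overwrites any CPPFLAGS and LDFLAGS you had already exported.

```shell
# dep_flags PREFIX...
# Hypothetical helper: set and export CPPFLAGS to "-IPREFIX/include ..." and
# LDFLAGS to "-LPREFIX/lib ...", one entry per prefix, in the order given.
dep_flags() {
    CPPFLAGS=
    LDFLAGS=
    for p in "$@"; do
        CPPFLAGS="${CPPFLAGS:+$CPPFLAGS }-I$p/include"
        LDFLAGS="${LDFLAGS:+$LDFLAGS }-L$p/lib"
    done
    export CPPFLAGS LDFLAGS
}

# Example, equivalent to the netCDF command above:
#   dep_flags /usr/local/zlib-1.2.11_mpich-3.2 /usr/local/szip-2.1_mpich-3.2 \
#             /usr/local/hdf5-1.10.1_mpich-3.2
#   CC=mpicc ./configure --enable-parallel-tests \
#       --prefix=/usr/local/netcdf-c-4.5.0-rc1_mpich-3.2
```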

Postscript

One reader has pointed out the new tool spack. This very interesting project attempts to automate the install of tools, with ways to control versions, include other libraries, and name configurations.

Perhaps I will give spack a try in the future.

Thanks for the post. Do you know how to build netCDF with HDF4 support?

Yes. To build with HDF4 support, install the HDF4 library somewhere. Then, when building netCDF, add its include directory to CPPFLAGS as a -I option and its lib directory to LDFLAGS as a -L option, and configure netCDF with --enable-hdf4.

You will see in the netCDF configure output whether it finds the HDF4 include file and library. If you have set your CPPFLAGS and LDFLAGS correctly, it will find HDF4.

NetCDF will then be able to read HDF4 SD files as if they were netCDF. Writing to HDF4 is not supported.

Up until now, I've never thought to build NetCDF and its associated libraries (e.g. zlib, szip, hdf5) with mpi/mpich ... does this enable parallelism in netCDF? I thought in order to do that, one needed to separately build the parallel-netcdf library.

Building the toolchain with mpich (or another MPI compiler) does enable parallel IO for netCDF-4/HDF5 files.

NetCDF can also be built *with* parallel-netcdf (using the --enable-pnetcdf flag to netCDF's configure). In this case, netCDF will also be able to provide parallel access to classic, 64-bit offset, and CDF5 files.

All of the above uses the netCDF API.

Or, you can use parallel-netcdf in stand-alone mode (i.e. without netCDF). In this case you get parallel IO for classic, 64-bit offset, and CDF5 files. NetCDF-4/HDF5 files are not supported in any way by parallel-netcdf. Parallel-netcdf has its own API, which closely mirrors the netCDF API, but netCDF code must be changed (a little) to work with parallel-netcdf directly.

Thanks! That's very useful info!

Thanks, very useful article. You should include MPI in your figure. On HPC, a different compiler and MPI toolchain, sometimes incompatible with gcc, is often the reason one needs to install netCDF from scratch.

Talking HPC, the fortran library of netcdf is often needed as well. Why is the F90-API mentioned as 3rd party? I usually download it from https://www.unidata.ucar.edu/downloads/netcdf/index.jsp

The F90 library is listed as 3rd party because it originated outside Unidata. But it has become the netCDF F90 standard, and has been distributed by Unidata for over a decade now. So I agree it is not really an external library.
